U.S.-based startup Skyflow has introduced a solution that helps businesses use ChatGPT while protecting sensitive and private data.

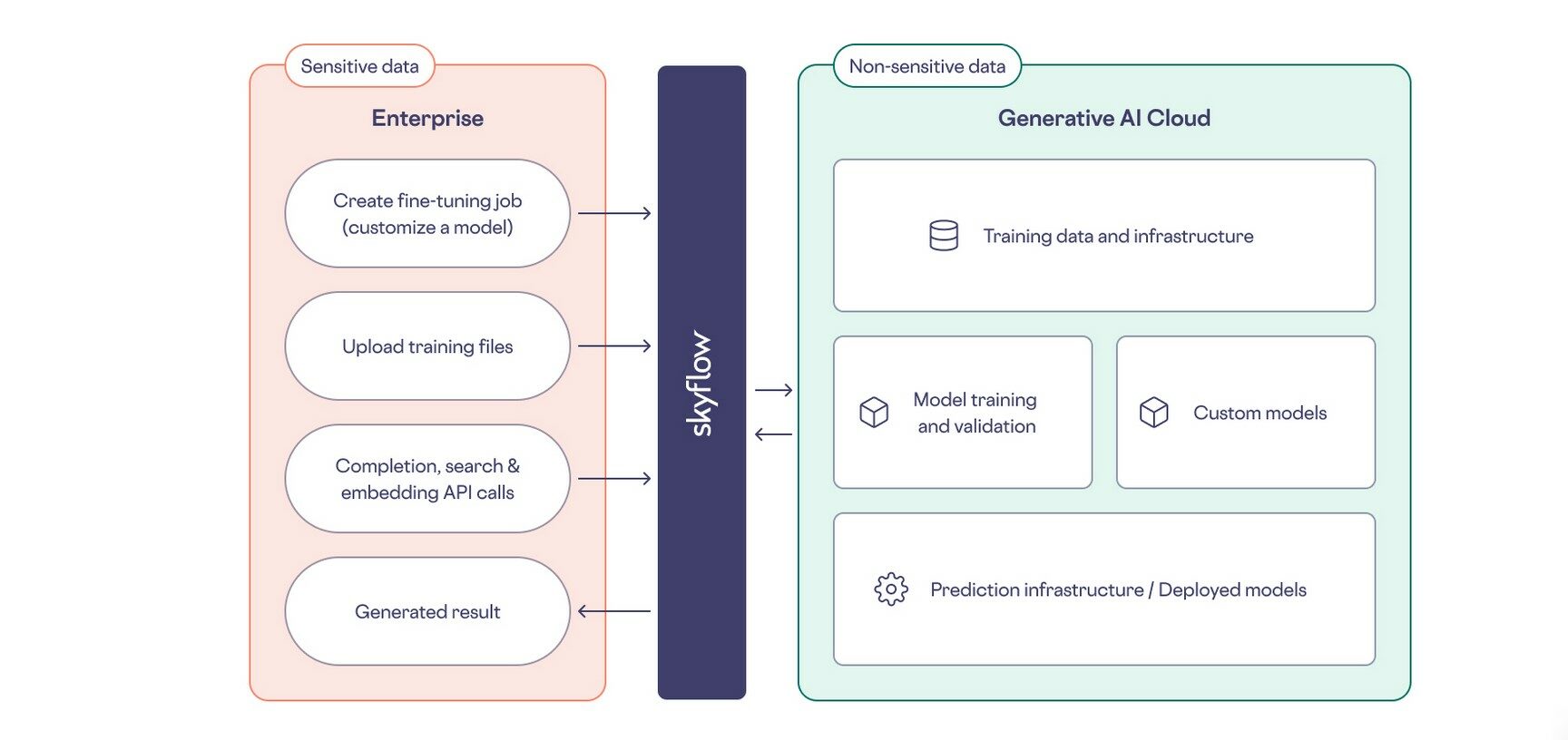

Skyflow has unveiled the GPT Privacy Vault, a service aimed at the privacy concerns associated with training and using Generative Pre-trained Transformer (GPT) models. It is designed to prevent sensitive data from leaking into GPT models during both training and inference, adding a layer of protection for user inputs and multi-party model training. With the launch, Skyflow reaffirms its commitment to safeguarding sensitive information as AI adoption accelerates across sectors.

“By using Skyflow GPT Privacy Vault, companies can protect the privacy and security of sensitive data, protect user privacy, and retain user trust when using GPT-based AI systems,” the company states in a blog post.

GPT models are typically trained on large amounts of data. Without proper safeguards, it’s easy for personally identifiable information (PII) or other sensitive data to leak into a model training dataset.

To address this potential risk, all training data flows through Skyflow, where sensitive data is identified and stored within the vault. Skyflow filters out plaintext sensitive data from the training dataset during data ingress to prevent this data from reaching GPTs during model training.
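
The general pattern described here (detect sensitive values, store them in a vault, and pass only placeholders downstream) can be illustrated with a minimal sketch. This is not Skyflow's API or implementation: the `ToyVault` class, the `scrub` function, the token format, and the two regular expressions are all hypothetical, and real PII detection would need to cover far more than emails and SSN-like numbers.

```python
import re
import uuid

# Hypothetical detection rules, for illustration only.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


class ToyVault:
    """In-memory stand-in for a privacy vault (not a real product API)."""

    def __init__(self):
        self._store = {}

    def tokenize(self, kind, value):
        # Keep the plaintext inside the vault and hand back an opaque token.
        token = f"<{kind}_{uuid.uuid4().hex[:8]}>"
        self._store[token] = value
        return token

    def detokenize(self, token):
        # Only callers with access to the vault can recover the original value.
        return self._store[token]


def scrub(text, vault):
    """Replace every detected sensitive value in `text` with a vault token."""
    for kind, pattern in PATTERNS.items():
        text = pattern.sub(
            lambda m, k=kind: vault.tokenize(k, m.group(0)), text
        )
    return text


vault = ToyVault()
raw_records = [
    "Contact jane.doe@example.com about invoice 1042.",
    "Customer SSN is 123-45-6789.",
]

# Only the scrubbed records would be passed on to model training.
training_set = [scrub(record, vault) for record in raw_records]
```

In this sketch the training set contains only tokens in place of the detected values, while the plaintext stays in the vault and can be recovered with `detokenize` by a caller that has access to it.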